New Internet protocols

SPDY – QUIC – HTTP/2

HTTP was pioneered by Tim Berners-Lee and other early Interneters in the late 1980s, and it was revised a few times throughout the 1990s, resulting in HTTP/1.1, which came out in 1999. And then after that … nothing. Which is weird because the Internet has changed a lot since 1999.

In 2015 the Internet Engineering Task Force (IETF) finally approved a new protocol to protect traffic and secure Internet communications, with the goal of keeping cyberspace open and free.

The IETF protocol in question is HTTP/2, an overhaul of HTTP/1.1 (the foundation of the World Wide Web) designed to optimize web traffic performance. The specification itself does not mandate encryption, but major browsers only support HTTP/2 over TLS, so in practice it is encrypted by default. Encryption, however, goes far beyond common email or even websites: with the proliferation of the Internet of Everything (IoE), the attack surface is growing.

- February 2015: the IETF steering group announced the completion of HTTP/2

- Real performance improvement over TCP

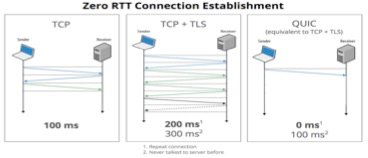

- QUIC’s lower-latency connection establishment

- zero-round-trip connection establishment

- TCP + TLS requires 2 to 3 round trips

- improved congestion control

- better loss recovery

- Sites are loading about 72 percent or three seconds faster across its network on HTTP/2 compared with HTTP/1.1.

- About 2.5 percent of all sites across the Web have currently upgraded to HTTP/2

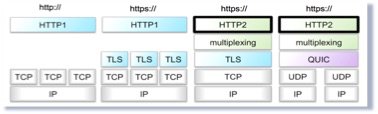

Protocol evolution from SPDY over QUIC to HTTP/2

Protocol for a faster and more secure web

SPDY

Four draft versions of the SPDY protocol have been published: 1, 2, 3 and 3.1. Version 4 is still experimental and has not been released, although Chromium already contains some version 4 code.

Basic features:

- Multiplexed streams

SPDY allows for unlimited concurrent streams over a single TCP connection. Because requests are interleaved on a single channel, the efficiency of TCP is much higher: fewer network connections need to be made, and fewer, but more densely packed, packets are issued.

- Request prioritization

Although unlimited parallel streams solve the serialization problem, they introduce another one: if bandwidth on the channel is constrained, the client may block requests for fear of clogging the channel. To overcome this problem, SPDY implements request priorities: the client can request as many items as it wants from the server, and assign a priority to each request. This prevents the network channel from being congested with non-critical resources when a high-priority request is pending.

- HTTP header compression

SPDY compresses request and response HTTP headers, resulting in fewer packets and fewer bytes transmitted.
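The following Python sketch illustrates multiplexing and prioritization together: frames from several streams are interleaved over one channel, the highest-priority stream with pending data always goes next, and equal-priority streams take turns. The stream IDs, payloads, frame size and the `interleave` function are illustrative assumptions (priority 0 is highest, as in SPDY/3's 0 to 7 scale); real SPDY framing carries much more than a stream ID and a chunk.

```python
import heapq
import itertools

FRAME_SIZE = 4  # bytes per data frame; deliberately tiny so interleaving is visible

def interleave(requests):
    """Interleave frames from several streams over one channel.

    requests: list of (stream_id, priority, payload); priority 0 is highest.
    Yields (stream_id, chunk). A lower priority number always goes first;
    streams with equal priority take turns, one frame at a time.
    """
    seq = itertools.count()  # tie-breaker: re-queued streams go behind their peers
    heap = [(prio, next(seq), sid, data) for sid, prio, data in requests]
    heapq.heapify(heap)
    while heap:
        prio, _, sid, data = heapq.heappop(heap)
        chunk, rest = data[:FRAME_SIZE], data[FRAME_SIZE:]
        yield sid, chunk
        if rest:
            heapq.heappush(heap, (prio, next(seq), sid, rest))

frames = list(interleave([
    (1, 3, b"image-bytes"),  # low-priority image
    (3, 0, b"<html>..."),    # high-priority HTML document
    (5, 3, b"script"),       # low-priority script
]))
print([sid for sid, _ in frames])  # → [3, 3, 3, 1, 5, 1, 5, 1]
```

The HTML stream (priority 0) is sent completely before the image and script, which then alternate frame by frame on the same connection.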

Advantages:

In addition, SPDY provides an advanced feature, server-initiated streams. Server-initiated streams can be used to deliver content to the client without the client needing to ask for it. This option is configurable by the web developer in two ways:

- Server push

SPDY experiments with an option for servers to push data to clients via the X-Associated-Content header. This header informs the client that the server is pushing a resource to the client before the client has asked for it. For initial-page downloads (e.g. the first time a user visits a site), this can vastly enhance the user experience.

- Server hint

Rather than automatically pushing resources to the client, the server uses the X-Subresources header to suggest to the client that it should ask for specific resources, in cases where the server knows in advance of the client that those resources will be needed. However, the server will still wait for the client request before sending the content. Over slow links, this option can reduce the time it takes for a client to discover it needs a resource by hundreds of milliseconds, and may be better for non-initial page loads.

Shortcomings:

- Because all streams share a single TCP connection, TCP's head-of-line blocking limits transmission speed.

- When packets are lost, the negative impact can outweigh the benefits of header compression and priority control.

- As a result of these shortcomings, SPDY's benefit is not obvious on small websites (those with few resource files), and it may even be slower than many concurrent connections.

- These shortcomings gave birth to QUIC.

QUIC

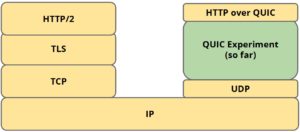

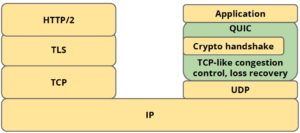

QUIC is the name of a new experimental protocol; it stands for Quick UDP Internet Connection. The protocol supports a set of multiplexed connections over UDP, and was designed to provide security protection equivalent to TLS/SSL, along with reduced connection and transport latency.

QUIC was developed by Google. Its outline design document is published on Google Docs and is still being updated by Google Research. The detailed design document, the wire-format specification and an Internet Engineering Task Force draft are also available.

Basic features:

The design documents summarize considerations drawn from the characteristics of TCP and UDP, network security, and other areas.

Many of the design ideas address four disadvantages of SPDY over TCP:

- A single lost packet blocks the whole TCP stream, and with it every multiplexed SPDY stream.

- TCP's congestion-avoidance mechanism is ill-suited to multiplexing, leading to reduced bandwidth and added serialization latency.

- TLS session reconnection adds waiting time: the handshake requires an additional round trip.

- TLS decryption overhead: a packet cannot be decrypted until the packets ahead of it have arrived.

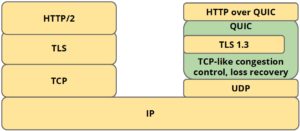

You can think of QUIC as an exploration of how to solve, on top of UDP, the bottlenecks that SPDY runs into with TCP. By analogy with SPDY, the content QUIC transfers can be viewed as split into two layers: the upper layer resembles SPDY, while the lower layer implements on UDP the connection-oriented behavior and reliability of TCP, together with TLS-like encryption.

What are some of the distinctive techniques being tested in QUIC?

QUIC will employ bandwidth estimation in each direction into congestion avoidance, and then pace packet transmissions evenly to reduce packet loss. It will also use packet-level error correction codes to reduce the need to retransmit lost packet data. QUIC aligns cryptographic block boundaries with packet boundaries, so that packet loss impact is further contained.
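Pacing can be illustrated with a short calculation: rather than bursting a window of packets onto the wire, the sender spaces them by the time each packet occupies at the estimated bandwidth. This is a simplified sketch that assumes a bandwidth estimate is already available; the function name and the numbers are hypothetical, and QUIC's actual pacer is more elaborate.

```python
def pacing_schedule(num_packets, packet_bytes, bandwidth_bps, start=0.0):
    """Send times (in seconds) that spread packets evenly at the estimated bandwidth.

    Each packet occupies packet_bytes * 8 / bandwidth_bps seconds of link time,
    so a paced sender waits that long between packets instead of bursting them.
    """
    gap = packet_bytes * 8 / bandwidth_bps
    return [start + i * gap for i in range(num_packets)]

# Hypothetical numbers: 1250-byte packets and a 10 Mbit/s bandwidth estimate.
times = pacing_schedule(4, 1250, 10_000_000)
print([round(t * 1000, 3) for t in times])  # milliseconds → [0.0, 1.0, 2.0, 3.0]
```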

Doesn’t SPDY already provide multiplexed connections over SSL?

Yes, but SPDY currently runs across TCP, and that induces some undesirable latency costs (even though SPDY is already producing lower latency results than traditional HTTP over TCP).

Why isn’t SPDY over TCP good enough?

A single lost packet in an underlying TCP connection stalls all of the multiplexed SPDY streams over that connection. By comparison, a single lost packet for X parallel HTTP connections will only stall 1 out of X connections. With UDP, QUIC can support out-of-order delivery, so that a lost packet will typically impact (stall) at most one stream. TCP’s congestion avoidance via a single congestion window also puts SPDY at a disadvantage over TCP when compared to several HTTP connections, each with a separate congestion window. Separate congestion windows are not impacted as much by a packet loss, and we hope that QUIC will be able to more equitably handle congestion for a set of multiplexed connections.
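A toy model makes the difference concrete. It assumes each packet carries data for exactly one stream and asks which packets the receiver can hand to the application while one packet is still missing; the function and the stream layout are hypothetical.

```python
def deliverable(packets, lost_index, per_stream_ordering):
    """Indices of packets the receiver can hand to the application while one packet is missing.

    packets: stream ID carried by each packet, in sending order.
    per_stream_ordering=False models TCP: a single ordered byte stream, so
    everything after the gap waits. True models QUIC: order is enforced only
    within a stream, so only later packets of the lost packet's stream wait.
    """
    lost_stream = packets[lost_index]
    ready = []
    for i, stream_id in enumerate(packets):
        if i == lost_index:
            continue  # the missing packet itself
        blocked = i > lost_index and (not per_stream_ordering or stream_id == lost_stream)
        if not blocked:
            ready.append(i)
    return ready

packets = [1, 2, 3, 1, 2, 3]  # three streams, two packets each
print(deliverable(packets, 1, per_stream_ordering=False))  # TCP-like:  [0]
print(deliverable(packets, 1, per_stream_ordering=True))   # QUIC-like: [0, 2, 3, 5]
```

Losing one packet of stream 2 stalls everything behind it in the TCP model, but only stream 2's later packet in the QUIC model.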

Are there any other reasons why TCP isn’t good enough?

TCP, and TLS/SSL, routinely require one or more round trip times (RTTs) during connection establishment. We’re hopeful that QUIC can commonly reduce connection costs towards zero RTTs. (i.e., send hello, and then send data request without waiting).
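The round-trip arithmetic can be sketched by counting the round trips before the first response byte arrives. The per-protocol handshake counts are typical values assumed for illustration (TLS 1.2 full handshake: 2 RTTs; resumed session: 1 RTT; QUIC first contact: 1 RTT; QUIC repeat connection: 0 RTT), and server processing and transmission time are ignored.

```python
# Handshake round trips before the client may send its request (assumed typical values).
HANDSHAKE_RTTS = {
    "TCP + TLS 1.2, full handshake": 1 + 2,
    "TCP + TLS 1.2, session resumption": 1 + 1,
    "QUIC, first contact with server": 1,
    "QUIC, repeat connection (0-RTT)": 0,
}

def time_to_first_byte_ms(handshake_rtts, rtt_ms):
    """Handshake round trips plus one round trip for the request/response itself."""
    return (handshake_rtts + 1) * rtt_ms

for name, rtts in HANDSHAKE_RTTS.items():
    print(f"{name}: {time_to_first_byte_ms(rtts, 100)} ms at 100 ms RTT")
```

At a 100 ms RTT this prints 400, 300, 200 and 100 ms respectively, which is why removing handshake round trips matters most on high-latency (for example mobile) links.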

Why can’t you just evolve and improve TCP under SPDY?

That is our goal. TCP support is built into the kernel of operating systems. Considering how slowly users around the world upgrade their OS, it is unlikely to see significant adoption of client-side TCP changes in less than 5-15 years. QUIC allows us to test and experiment with new ideas, and to get results sooner. We are hopeful that QUIC features will migrate into TCP and TLS if they prove effective.

Why didn’t you build a whole new protocol, rather than using UDP?

Middle boxes on the Internet today will generally block traffic unless it is TCP or UDP traffic. Since we couldn’t significantly modify TCP, we had to use UDP. UDP is used today by many game systems, as well as VOIP and streaming video, so its use seems plausible.

Why does QUIC always require encryption of the entire channel?

As we learned with SPDY and other protocols, if we don’t encrypt the traffic, then middle boxes are guaranteed to (wittingly, or unwittingly) corrupt the transmissions when they try to “helpfully” filter or “improve” the traffic.

UDP doesn’t have congestion control, so won’t QUIC cause Internet collapse if widely adopted?

QUIC employs congestion controls, just as it employs automatic retransmission to support reliable transport. QUIC will attempt to be fair with competing TCP traffic. For instance, when conveying Q multiplexed flows, and sharing bandwidth with T concurrent TCP flows, we will try to use resources in the range of Q / (Q+T) bandwidth (i.e., “a fair share” for Q additional flows).
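As a worked example of that target share: with Q = 3 multiplexed QUIC flows and T = 2 concurrent TCP flows on the same bottleneck, QUIC would aim for 3/5 of the bandwidth, which is what three of five equally treated connections would get. The 10 Mbit/s link below is an assumed value.

```python
from fractions import Fraction

def quic_fair_share(q_flows, t_flows):
    """Fraction of a shared bottleneck QUIC aims for: Q / (Q + T)."""
    return Fraction(q_flows, q_flows + t_flows)

share = quic_fair_share(3, 2)
print(share)              # → 3/5
print(float(share) * 10)  # Mbit/s of an assumed 10 Mbit/s link → 6.0
```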

Why didn’t you use existing standards such as SCTP over DTLS?

QUIC incorporates many techniques in an effort to reduce latency. SCTP and DTLS were not designed to minimize latency, and this is significantly apparent even during the connection establishment phases. Several of the techniques that QUIC is experimenting with would be difficult technically to incorporate into existing standards. As an example, each of these other protocols require several round trips to establish a connection, which is at odds with our target of 0-RTT connectivity overhead.

Advantages:

- Multiplexing

The QUIC multiplexing feature has been inherited from SPDY and provides:

  - Prioritization among QUIC streams.

  - Traffic bundling over the same UDP connection.

  - Compression of HTTP headers over the same connection.

- Connection startup latency and security

The time required to set up a TCP connection is one RTT for the handshake, plus one or two extra RTTs for an encrypted connection over TLS. With QUIC, setting up a connection takes at most one RTT, and when the client has connected to the server before, startup latency is zero RTT even for an encrypted connection. QUIC-Crypto decrypts each packet independently, avoiding a serialized decoding dependency that would undermine QUIC's ability to deliver packets out of order and thereby reduce head-of-line (HOL) blocking.

- Forward Error Correction

The Forward Error Correction (FEC) module copes with packet losses. It is particularly effective at reducing HOL blocking on a single QUIC stream by promptly recovering a lost packet, especially when the RTT is high and retransmissions would considerably increase HOL latency.

- Pluggable Congestion Control

QUIC has been designed to support two congestion control algorithms. The first is an implementation of TCP CUBIC, and the second is a pacing-based congestion control algorithm that computes the application sending rate from an estimate of the relative forward delay, defined as the difference between the inter-arrival time of two consecutive data packets at the receiver and the inter-departure time of the same packets at the sender.

- Connection Identifier

A QUIC connection is uniquely identified by a CID (Connection Identifier) at the application layer rather than by the pair of IP addresses and port numbers. The first advantage is that, since CIDs are not based on IP addresses, any handover between two networks can be handled transparently by QUIC without re-establishing the connection. Moreover, the CID is useful after a NAT unbinding, when restoring the connection typically requires a new pair of IP addresses.
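Forward error correction can be sketched with simple XOR parity, the kind of scheme early QUIC experimented with: after a group of packets the sender transmits one redundant packet equal to the XOR of the group, and the receiver can rebuild any single lost packet of that group without waiting a round trip for a retransmission. The group contents and function names are illustrative assumptions, not QUIC's wire format.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings, zero-padding the shorter one.

    With unequal lengths, a rebuilt short packet comes back with trailing zero
    bytes; a real scheme would also need to carry packet lengths.
    """
    n = max(len(a), len(b))
    a, b = a.ljust(n, b"\0"), b.ljust(n, b"\0")
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(group):
    """Redundant packet sent after a group: the XOR of every packet in it."""
    return reduce(xor_bytes, group)

def recover(received, parity):
    """Rebuild the one missing packet: XOR the parity with every packet that did arrive."""
    return reduce(xor_bytes, received, parity)

group = [b"GET /a", b"GET /b", b"GET /c"]
parity = make_parity(group)
# Suppose the middle packet is lost in transit.
print(recover([group[0], group[2]], parity))  # → b'GET /b'
```

One parity packet per group costs bandwidth on every group but can only repair a single loss per group, which is the trade-off such a scheme has to balance.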

Shortcomings:

- It’s UDP:

QUIC bundles streams over a single UDP flow, and UDP alone has long been a challenge for middleboxes (firewalls, DPI and NAT engines), which have contributed to the inability of the Internet to evolve [I-D.hildebrand-middlebox-erosion].

Currently QUIC cannot be distinguished from non-QUIC UDP traffic, so networks cannot defend themselves or their hosts from attack. This is clearly a problem if QUIC traffic cannot be distinguished from attack traffic such as DoS/DDoS.

There is a strong desire to avoid this ossification with QUIC. At the same time, there is a desire to treat QUIC better than normal UDP traffic; that is, to treat QUIC as well as TCP traffic. Unfortunately, the lack of header information in QUIC prevents the network path from identifying QUIC traffic and from treating QUIC as a transport protocol on par with TCP.

- Consent to Receive and Rate Limiting:

On many networks UDP is rate-limited or completely blocked, on a per-host or per-link basis. The limits are imposed to prevent compromised hosts from generating high volumes of UDP traffic towards a victim [I-D.byrne-opsec-udp-advisory].

Some protocols are request/response and could have higher rate limits because consent to receive is visible to the path (e.g., DNS, NTP), but others are send-only (e.g., SNMP traps, SYSLOG). The configuration expense and fear of ossification involved in deeper packet inspection are not commensurate with the benefit of higher rate limits for those request/response protocols, so many networks simply rate limit or block UDP.

- ICMP

As of today the QUIC protocol document says nothing about ICMP. So one can only guess what QUIC implementations will do.

If ICMP is ignored, the middle-box can corrupt, delay, or rate-limit (including rate limiting to 0 bytes per day, a.k.a. ‘drop’).

If ICMP is received, validated, and handled, the endpoint can more quickly react to the block (or path MTU problem, or whatever the ICMP said).

Many Fortune 1000 companies block bidirectional UDP traffic, which means QUIC is blocked too. If the QUIC stack ignores ICMP messages, the firewalls cannot help the QUIC stack fall back to TCP quickly, which harms the user experience or requires more aggressive “Happy Eyeballs” timers (doubling network traffic when trying both QUIC and TCP).

- Association with Existing Consent

Once a consent to receive is established, multiple packets will usually be received in response to a single request. In TCP, both the 5-tuple and the sequence numbers on a given packet are used to provide hints to the path about this association, in an attempt to make the job of off-path attackers more difficult. QUIC does not let the path associate packets with an existing consent at comparable assurance, so the network cannot filter attacks such as denial of service.

- Identification

The externally visible QUIC version number is useful for future protocol agility. However, because it is visible to the path, the path is likely to ossify around that value. It is therefore useful to have something else that identifies QUIC, so that the version number can change while QUIC packets are still identified the same way. A path-visible mechanism to identify QUIC packets, as well as a path-invisible version number, would therefore be needed.

- Spurious Packets

A spurious packet may arrive when an endpoint (client or server):

  - loses state due to a reboot

  - experiences a QUIC application crash

  - acquires another host’s prior IP address

  - receives a malicious or accidental QUIC packet.

In those cases, the host might have a QUIC application listening on that port, a non-QUIC application listening on that port, or no application listening on that port.

- Path State Loss

If a firewall, NAT, or load balancer discards its mapping state without notifying the endpoint, both endpoints can take a long time to discover the path state has been lost. To avoid this delay, it is desirable to send a signal that the path state will be lost or has been lost. QUIC in the current state does not provide a way for on-path middleboxes to signal that their mapping will be lost or has been lost.

This section is courtesy of Dan Wing and Joe Hildebrand.

For more detailed reading please have a look at: draft-wing-quic-network-req-00

HTTP/2

The eleventh edition of the HTTP/2 draft was updated on March 17, 2014.

HTTP/2 is being developed by the IETF as a standards body and is based on SPDY. The differences are:

- It adds an HTTP/1.1 Upgrade mechanism, so HTTP/2 can be used directly over TCP, whereas SPDY must run over TLS.

- Over HTTPS, it negotiates the protocol with ALPN (Application Layer Protocol Negotiation), the standardized successor to NPN.

- The negotiation and confirmation process is more thoroughly specified.

- An improved Server Push process.

- More types of control frames, and a more carefully designed frame format.

- HPACK, a new header compression algorithm that replaces SPDY's header block compression.
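HPACK is specified in RFC 7541. One of its building blocks is a prefixed integer encoding: a value that fits in the N-bit prefix of the first byte is stored there, and larger values spill into continuation bytes carrying 7 bits each. The sketch below follows section 5.1 of that RFC; the function names are hypothetical, and the expected outputs match the RFC's own examples.

```python
def hpack_encode_int(value: int, prefix_bits: int, first_byte_flags: int = 0) -> bytes:
    """Encode an integer with an N-bit prefix (RFC 7541, section 5.1).

    first_byte_flags holds the high bits of the first byte that belong to the
    representation type, e.g. 0x80 for an indexed header field.
    """
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return bytes([first_byte_flags | value])
    out = [first_byte_flags | max_prefix]
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) + 128)  # low 7 bits, continuation bit set
        value //= 128
    out.append(value)
    return bytes(out)

def hpack_decode_int(data: bytes, prefix_bits: int) -> tuple[int, int]:
    """Decode a prefixed integer; returns (value, number of bytes consumed)."""
    max_prefix = (1 << prefix_bits) - 1
    value = data[0] & max_prefix
    if value < max_prefix:
        return value, 1
    shift, i = 0, 1
    while True:
        b = data[i]
        value += (b & 0x7F) << shift
        shift += 7
        i += 1
        if not b & 0x80:  # continuation bit clear: last byte
            return value, i

print(hpack_encode_int(1337, 5).hex())      # → 1f9a0a
print(hpack_encode_int(2, 7, 0x80).hex())   # → 82, indexed static entry ":method: GET"
```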

HTTP/2 can be used with both the http and https URL schemes.

With the http scheme, the client first sends an HTTP/1.1 request to the server that includes the Upgrade (h2c) and HTTP2-Settings headers.
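RFC 7540 (section 3.2) defines this upgrade for cleartext HTTP/2, identified by the token `h2c`. The HTTP2-Settings header carries the client's SETTINGS frame payload (6 bytes per setting: a 16-bit identifier and a 32-bit value), base64url-encoded with the trailing `=` padding omitted. The host and the two settings values below are assumptions chosen for illustration.

```python
import base64
import struct

# SETTINGS identifiers from RFC 7540, section 6.5.2
SETTINGS_MAX_CONCURRENT_STREAMS = 0x3
SETTINGS_INITIAL_WINDOW_SIZE = 0x4

def http2_settings_header(settings: dict[int, int]) -> str:
    """Encode a SETTINGS payload for the HTTP2-Settings header.

    Each setting is a 16-bit identifier followed by a 32-bit value in network
    byte order; the payload is base64url-encoded without '=' padding.
    """
    payload = b"".join(struct.pack("!HI", ident, value) for ident, value in settings.items())
    return base64.urlsafe_b64encode(payload).rstrip(b"=").decode("ascii")

def h2c_upgrade_request(host: str, path: str = "/") -> str:
    """Build the HTTP/1.1 request that asks the server to switch to cleartext HTTP/2."""
    settings = http2_settings_header({
        SETTINGS_MAX_CONCURRENT_STREAMS: 100,
        SETTINGS_INITIAL_WINDOW_SIZE: 65535,
    })
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: Upgrade, HTTP2-Settings\r\n"
        "Upgrade: h2c\r\n"
        f"HTTP2-Settings: {settings}\r\n"
        "\r\n"
    )

print(h2c_upgrade_request("example.com"))
```

A server that accepts the upgrade answers `HTTP/1.1 101 Switching Protocols` and then continues on the same TCP connection with HTTP/2 frames; a server that does not simply answers the request over HTTP/1.1.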

How to use h2 in Apache:

Whether the protocol is HTTP or SPDY, each thin layer of the loading process has to carry its own responsibilities. Where HTTP and SPDY differ, the code needs a common base class from which HTTP and SPDY each inherit, each implementing its own processing.
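A minimal Python sketch of that base-class design: the shared loading flow lives in the base class and only the protocol-specific encoding differs. The class names are hypothetical and the SPDY framing is deliberately simplified (a 4-byte stream ID followed by a zlib-compressed header block); it mirrors only SPDY's odd client stream IDs and its single compression context per connection, not the real wire format.

```python
import zlib
from abc import ABC, abstractmethod

class LoaderSession(ABC):
    """Hypothetical base class holding the loading flow shared by HTTP and SPDY."""

    def load(self, host: str, path: str) -> bytes:
        headers = {"host": host, "path": path, "method": "GET"}
        return self.encode_request(headers)

    @abstractmethod
    def encode_request(self, headers: dict) -> bytes:
        """Protocol-specific step: turn the request headers into bytes for the wire."""

class HttpSession(LoaderSession):
    def encode_request(self, headers):
        # Plain-text HTTP/1.1 request line and Host header.
        return (f"{headers['method']} {headers['path']} HTTP/1.1\r\n"
                f"Host: {headers['host']}\r\n\r\n").encode("ascii")

class SpdySession(LoaderSession):
    def __init__(self):
        self.next_stream_id = 1               # client-initiated streams use odd IDs
        self.compressor = zlib.compressobj()  # one compression context for the whole connection

    def encode_request(self, headers):
        stream_id = self.next_stream_id
        self.next_stream_id += 2
        block = "".join(f"{k}:{v}\n" for k, v in headers.items()).encode("ascii")
        # Z_SYNC_FLUSH emits this block completely while keeping the shared context.
        compressed = self.compressor.compress(block) + self.compressor.flush(zlib.Z_SYNC_FLUSH)
        # Simplified frame: 4-byte stream ID + compressed headers (not real SPDY framing).
        return stream_id.to_bytes(4, "big") + compressed

http = HttpSession()
spdy = SpdySession()
print(http.load("example.com", "/"))
print(spdy.load("example.com", "/")[:4], spdy.load("example.com", "/style.css")[:4])
```

Because the header decompression state is per connection, the receiving side must decompress the SPDY header blocks in order with one shared decompressor, which is one reason the SPDY path needs its own callback handling.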

SPDY-specific implementation responsibilities:

- Callback mechanism. SPDY compresses HTTP headers, so to hook into the ordinary HTTP process the headers must either be decompressed first or be decompressed through a callback.

- Protocol. Control of the transport protocol flow, especially the Server Push feature.

- Error handling.

- Full duplex. A SPDY socket is full duplex: it sends and receives at the same time, unlike ordinary HTTP, which receives only after it has finished sending.

- Framing. Most of this layer corresponds to HTTP.

- SSL/TLS handshake process, because SPDY uses NPN.

- SPDY connection

  - A connection is generally identified by URL scheme, host, and port.

  - For SPDY and HTTPS these identifying values are all the same.

  - Multiplexed connections therefore also need the protocol as a distinguishing key.
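A connection pool keyed the way that last point suggests could look like this sketch: the key is (scheme, host, port) plus the negotiated protocol, so an HTTPS connection and a SPDY connection to the same origin are kept apart while SPDY requests to that origin share one connection. The class, the protocol labels and the string stand-ins for sockets are hypothetical; a real HTTP/1.1 pool would also keep several connections per key.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}

def pool_key(url: str, protocol: str) -> tuple[str, str, int, str]:
    """Scheme, host and port identify the origin; the protocol separates HTTPS from SPDY."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]
    return (parts.scheme, parts.hostname, port, protocol)

class ConnectionPool:
    def __init__(self):
        self._connections = {}

    def get(self, url: str, protocol: str) -> str:
        key = pool_key(url, protocol)
        if key not in self._connections:
            # Stand-in for opening a real socket.
            self._connections[key] = f"conn#{len(self._connections) + 1}"
        return self._connections[key]

pool = ConnectionPool()
print(pool.get("https://example.com/index.html", "spdy/3"))     # conn#1
print(pool.get("https://example.com:443/style.css", "spdy/3"))  # reuses conn#1
print(pool.get("https://example.com/index.html", "http/1.1"))   # separate conn#2
```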

Besides Chromium itself, the SPDY documentation lists several other implementations. The other C/C++ implementations have one thing in common: because they work at a low level, they depend heavily on external library code. They have been updated within the last three months, but most do not yet support all of SPDY's features and are still fixing bugs, so their code is not yet mature enough for browser-level use.

Should the 6 site support SPDY?

It is not necessary to support SPDY, HTTP/2 or QUIC.

Reasons:

- SPDY is a single company's standard, not an industry standard, and it still has defects and is not yet mature.

- If SPDY is accepted, matures, and becomes an industry and international standard, it will not be too late to support it then. HTTP/2 is based on SPDY and improves on it, so SPDY will sooner or later leave the stage of history, and the industry will then broadly support HTTP/2.

- Server-side SPDY support is itself immature: it does not implement all features and has bugs, so using it on a website carries some risk. Apache 2.2 + mod_spdy only supports SPDY 3, and Nginx 1.5.1 only supports SPDY 3.1 and does not implement Server Push. It will not be too late to adopt SPDY once the server and client programs are more mature. Commercial server applications did not support SPDY from the beginning; when they start to, it can be taken as a sign that the industry is beginning the supporting work for the new standard.

- Server-side support is not widespread, and few websites use it yet.

- Browsers that support SPDY have less than a 50% share in China, so there is no urgent need for the website to support SPDY. Safari does not support SPDY, which Apple has not adopted.

HTTP/2, SPDY, and QUIC with Ilya Grigorik

In the sixth episode of this podcast, your hosts Francesc and Mark interview Ilya Grigorik, Developer Advocate at Google.

About Ilya:

Ilya is a web performance engineer at Google, co-chair of the W3C Webperf WG and, in short, an Internet plumber.